Attend a Live Product Tour to see Sedai in action.

Register now

Optimize compute, storage and data

Choose copilot or autopilot execution

Continuously improve with reinforcement learning

Serverless computing has many benefits for application development in the cloud. It’s not surprising that given its promise to lower costs, reduce operational complexity, and increase DevOps efficiencies, serverless adoption is gaining more momentum every year. Serverless is now seeing an estimated adoption rate in over 50% of all public cloud customers.

However, one of the prevailing challenges for customers using serverless has been performance, specifically “cold starts.” Here, I want to explore all the popular remedies available to reduce cold starts, their benefits, and pitfalls, and finally, how a new autonomous concurrency solution from Sedai may be the answer to solving cold starts once and for all.

What causes serverless cold starts

To understand cold starts, we must look at how AWS Lambda works. AWS Lambda is a serverless, event-driven computing service. It enables you to run code for nearly any application or backend service without provisioning or managing servers. AWS Lambda speeds and simplifies the development and maintenance of applications by eliminating the need to manage servers and automating operational procedures. The advantage of Lambda is you pay only for what you use.

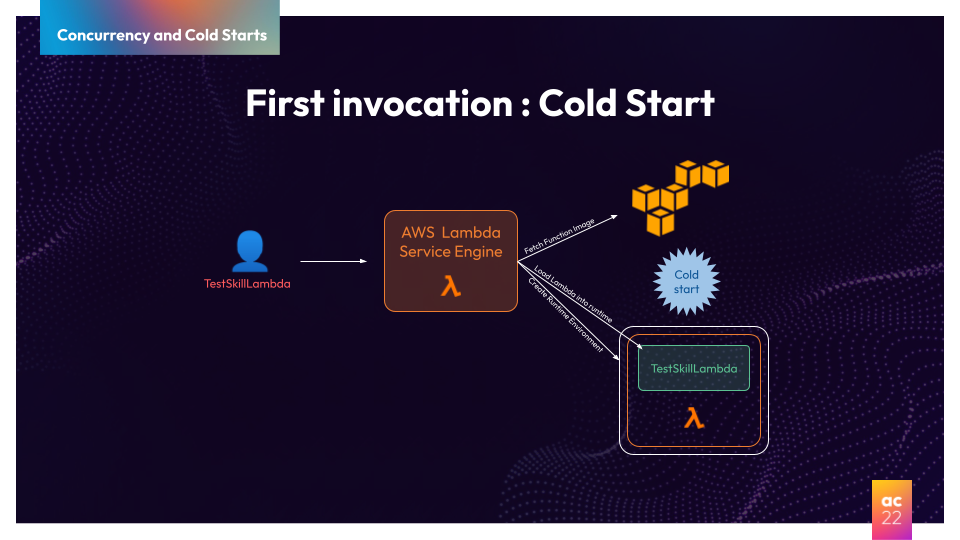

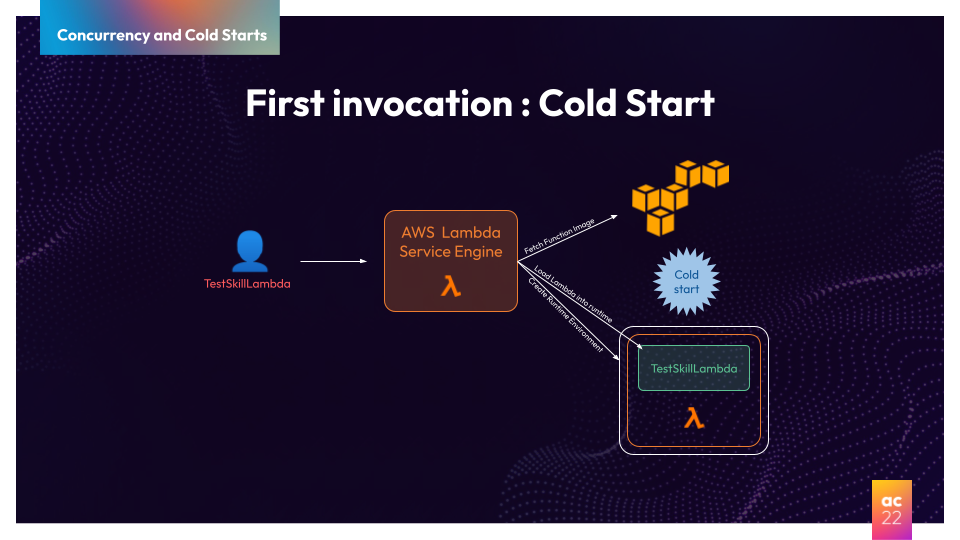

The simplest way to create a Lambda is first to write the code and enter it through the AWS console. Once published, AWS takes your code and, together with the runtime information and required dependencies, packages it as a container and stores it in their Simple Storage Service (S3).

When invoking the created Lambda, the service will first locate the container where the Lambda is stored. Secondly, it sets up all the necessary runtime environments that Lambda needs. Once all this is complete, the service executes the Lambda. All these steps that are required for the first Lambda invocation—getting the cold library code from storage, setting runtimes, preparing, and initialization—constitute what we refer to as a cold start.

When the second invocation occurs, since the Lambda container is already “warmed up,” the service invokes the function without a cold start. However, that is not true for all invocations. AWS must allocate resources for invocations. When the gap between invocations surpasses a given threshold, AWS will shut down the runtime and remove it from memory to conserve its resources.

Cold start in a nutshell

In short, a cold start is a resulting latency from the time required in the first request that a new Lambda worker handles. It’s a consequence of serverless scalability and reflects the startup time needed to warm up runtime to become operational. Cold starts can impact Lambda’s performance, especially for customer-facing applications.

The impact of cold starts on application performance

According to our research, 1.2% of all invocations in a typical implementation result in cold starts. With an average duration between 3 seconds for small JavaScript Lambdas and up to 14 seconds for .NET-based Lambdas, cold starts might not appear to be a concern. However, when considering that this may result in a customer waiting 14 seconds to make a purchase or that the 1.2% occurrence of cold starts leads to a 13% increase in total execution time, cold starts may interfere with your company’s revenue goal and deliver a poor customer experience. Given these considerations, eliminating the impact of cold starts on your infrastructure becomes both an imperative and an opportunity.

.png)

To address cold starts, cloud providers have proposed several approaches to remedy the problem. Two main remedies to reduce cold starts are provisioned concurrency and warmup plugins. Although these do help reduce cold starts, the improvements are insignificant and not scalable. Let’s take a closer look at them.

Concurrency refers to the number of requests a function serves at any given time. When a function is invoked, Lambda allocates an instance of it to process the event. Once the function code finishes running, it can handle another request. However, if the function is invoked again while a request is being processed, another instance will be allocated; this is concurrency. What is the impact of concurrency? The total concurrency for all functions in your account is subject to a per-region quota. Keep in mind, there is also a concurrency limit for Lambda functions defined in a region for your AWS account.

Traditionally, there are two types of concurrency, each with its unique advantages and cost concerns:

As an increasing number of mission-critical applications become serverless, customers want to increase control over the performance of their applications. To help these customers reduce the impact of cold starts, AWS launched provisioned concurrency. Simply put, when provisioned concurrency is enabled for a specific function, the service will initialize a specified number of execution environments to be ready to respond to invocations; this presents a few drawbacks.

Although provisioned concurrency helps reduce cold starts, it also sets up a trade-off because of how costly it can be to keep runtimes up and ready to respond to invocations. For customers, it’s challenging to identify the correct values for provisioned concurrency, but it is also tedious to manage and keep up to date. Autoscaling is another feature provided by cloud providers to alleviate some of these concerns, but this option still increases costs considerably.

Warmup plugins have also been another popular way to reduce cold starts. Warmup plugins reduce cold starts by creating a scheduled Lambda (“the warmer”), which invokes all selected service’s Lambdas in a configured time interval forcing containers to stay warm. However, warmup plugins require coding and detailed configuration to execute appropriately. One drawback with warmup plugins is that they do not adapt well to runtime and other changes requiring one to go back and make additional coding and configuration changes.

Here at Sedai, we recently launched our autonomous concurrency capability as part of Sedai’s autonomous cloud management platform. Autonomous concurrency is an innovative new approach to end nearly all cold starts while optimizing for cost.

Similar to provisioned concurrency, Sedai makes activation calls that keep runtime warm, ensuring your Lambda is ready before the first invocation. Powered by ML, Sedai uses reinforcement learning for autonomous concurrency to take. Sedai’s autonomous concurrency requires just three days of data to form a seasonality pattern to intelligently and dynamically adjust your serverless parameters and execute in your production environment to prevent cold starts from occurring.

Unlike provisioned concurrency, which requires CI/CD and development processes to be stringent, autonomous concurrency works on both versioned and unversioned Lambdas. Sedai’s autonomous concurrency also intelligently computes the requirements for concurrency by dynamically analyzing real-time traffic and seasonality patterns and adjusting as needed. Whereas provisioned concurrency requires upfront user configuration and requires ongoing manual adjustment. Last but not least, there is no risk of cost overruns when using Sedai’s autonomous concurrency; everything is continuously and autonomously optimized. This powerful autonomous concurrency feature allows users to manage concurrency easily and virtually eliminate cold starts. Autonomous concurrency does not require any changes to either configuration or code and can be enabled easily in Sedai.

Users can set up and use Sedai’s autonomous concurrency within 10 minutes without code and planning. There’s no need to know or guess the expected concurrency or make manual adjustments in settings. Because Sedai understands traffic patterns and seasonality, optimizations are done autonomously and behind the scenes. Through observation of actual live invocations of Lambda, Sedai adjusts the number of runtimes and frequency of warmups.

Below is a comparison of how these remedies compare to autonomous concurrency to solving cold starts:

Built into Sedai, autonomous concurrency is a new feature that virtually eliminates cold starts without the risk of cost overruns and any need for code and configuration changes. Like Tesla, with its eight cameras observing your environment while you drive, Sedai’s autonomous concurrency watches and learns your application, infrastructure, and traffic patterns. Once autonomous concurrency is enabled, Sedai identifies lifecycle events, intercepts activation calls, predicts the invocation seasonality, continuously validates, and issues activation calls to update concurrency values for optimal performance and cost.

Autonomous is the future, and it’s here for AWS. Are you ready for it?

November 28, 2022

August 9, 2024

Serverless computing has many benefits for application development in the cloud. It’s not surprising that given its promise to lower costs, reduce operational complexity, and increase DevOps efficiencies, serverless adoption is gaining more momentum every year. Serverless is now seeing an estimated adoption rate in over 50% of all public cloud customers.

However, one of the prevailing challenges for customers using serverless has been performance, specifically “cold starts.” Here, I want to explore all the popular remedies available to reduce cold starts, their benefits, and pitfalls, and finally, how a new autonomous concurrency solution from Sedai may be the answer to solving cold starts once and for all.

What causes serverless cold starts

To understand cold starts, we must look at how AWS Lambda works. AWS Lambda is a serverless, event-driven computing service. It enables you to run code for nearly any application or backend service without provisioning or managing servers. AWS Lambda speeds and simplifies the development and maintenance of applications by eliminating the need to manage servers and automating operational procedures. The advantage of Lambda is you pay only for what you use.

The simplest way to create a Lambda is first to write the code and enter it through the AWS console. Once published, AWS takes your code and, together with the runtime information and required dependencies, packages it as a container and stores it in their Simple Storage Service (S3).

When invoking the created Lambda, the service will first locate the container where the Lambda is stored. Secondly, it sets up all the necessary runtime environments that Lambda needs. Once all this is complete, the service executes the Lambda. All these steps that are required for the first Lambda invocation—getting the cold library code from storage, setting runtimes, preparing, and initialization—constitute what we refer to as a cold start.

When the second invocation occurs, since the Lambda container is already “warmed up,” the service invokes the function without a cold start. However, that is not true for all invocations. AWS must allocate resources for invocations. When the gap between invocations surpasses a given threshold, AWS will shut down the runtime and remove it from memory to conserve its resources.

Cold start in a nutshell

In short, a cold start is a resulting latency from the time required in the first request that a new Lambda worker handles. It’s a consequence of serverless scalability and reflects the startup time needed to warm up runtime to become operational. Cold starts can impact Lambda’s performance, especially for customer-facing applications.

The impact of cold starts on application performance

According to our research, 1.2% of all invocations in a typical implementation result in cold starts. With an average duration between 3 seconds for small JavaScript Lambdas and up to 14 seconds for .NET-based Lambdas, cold starts might not appear to be a concern. However, when considering that this may result in a customer waiting 14 seconds to make a purchase or that the 1.2% occurrence of cold starts leads to a 13% increase in total execution time, cold starts may interfere with your company’s revenue goal and deliver a poor customer experience. Given these considerations, eliminating the impact of cold starts on your infrastructure becomes both an imperative and an opportunity.

.png)

To address cold starts, cloud providers have proposed several approaches to remedy the problem. Two main remedies to reduce cold starts are provisioned concurrency and warmup plugins. Although these do help reduce cold starts, the improvements are insignificant and not scalable. Let’s take a closer look at them.

Concurrency refers to the number of requests a function serves at any given time. When a function is invoked, Lambda allocates an instance of it to process the event. Once the function code finishes running, it can handle another request. However, if the function is invoked again while a request is being processed, another instance will be allocated; this is concurrency. What is the impact of concurrency? The total concurrency for all functions in your account is subject to a per-region quota. Keep in mind, there is also a concurrency limit for Lambda functions defined in a region for your AWS account.

Traditionally, there are two types of concurrency, each with its unique advantages and cost concerns:

As an increasing number of mission-critical applications become serverless, customers want to increase control over the performance of their applications. To help these customers reduce the impact of cold starts, AWS launched provisioned concurrency. Simply put, when provisioned concurrency is enabled for a specific function, the service will initialize a specified number of execution environments to be ready to respond to invocations; this presents a few drawbacks.

Although provisioned concurrency helps reduce cold starts, it also sets up a trade-off because of how costly it can be to keep runtimes up and ready to respond to invocations. For customers, it’s challenging to identify the correct values for provisioned concurrency, but it is also tedious to manage and keep up to date. Autoscaling is another feature provided by cloud providers to alleviate some of these concerns, but this option still increases costs considerably.

Warmup plugins have also been another popular way to reduce cold starts. Warmup plugins reduce cold starts by creating a scheduled Lambda (“the warmer”), which invokes all selected service’s Lambdas in a configured time interval forcing containers to stay warm. However, warmup plugins require coding and detailed configuration to execute appropriately. One drawback with warmup plugins is that they do not adapt well to runtime and other changes requiring one to go back and make additional coding and configuration changes.

Here at Sedai, we recently launched our autonomous concurrency capability as part of Sedai’s autonomous cloud management platform. Autonomous concurrency is an innovative new approach to end nearly all cold starts while optimizing for cost.

Similar to provisioned concurrency, Sedai makes activation calls that keep runtime warm, ensuring your Lambda is ready before the first invocation. Powered by ML, Sedai uses reinforcement learning for autonomous concurrency to take. Sedai’s autonomous concurrency requires just three days of data to form a seasonality pattern to intelligently and dynamically adjust your serverless parameters and execute in your production environment to prevent cold starts from occurring.

Unlike provisioned concurrency, which requires CI/CD and development processes to be stringent, autonomous concurrency works on both versioned and unversioned Lambdas. Sedai’s autonomous concurrency also intelligently computes the requirements for concurrency by dynamically analyzing real-time traffic and seasonality patterns and adjusting as needed. Whereas provisioned concurrency requires upfront user configuration and requires ongoing manual adjustment. Last but not least, there is no risk of cost overruns when using Sedai’s autonomous concurrency; everything is continuously and autonomously optimized. This powerful autonomous concurrency feature allows users to manage concurrency easily and virtually eliminate cold starts. Autonomous concurrency does not require any changes to either configuration or code and can be enabled easily in Sedai.

Users can set up and use Sedai’s autonomous concurrency within 10 minutes without code and planning. There’s no need to know or guess the expected concurrency or make manual adjustments in settings. Because Sedai understands traffic patterns and seasonality, optimizations are done autonomously and behind the scenes. Through observation of actual live invocations of Lambda, Sedai adjusts the number of runtimes and frequency of warmups.

Below is a comparison of how these remedies compare to autonomous concurrency to solving cold starts:

Built into Sedai, autonomous concurrency is a new feature that virtually eliminates cold starts without the risk of cost overruns and any need for code and configuration changes. Like Tesla, with its eight cameras observing your environment while you drive, Sedai’s autonomous concurrency watches and learns your application, infrastructure, and traffic patterns. Once autonomous concurrency is enabled, Sedai identifies lifecycle events, intercepts activation calls, predicts the invocation seasonality, continuously validates, and issues activation calls to update concurrency values for optimal performance and cost.

Autonomous is the future, and it’s here for AWS. Are you ready for it?