
June 19, 2024

May 7, 2024



In environments where applications are not suited to microservices architectures, rightsizing, and vertical scaling in particular, becomes a critical strategy for running cost-effectively while meeting performance requirements. This approach involves choosing the right virtual machine type based on the CPU and memory resources required. Rightsizing is a well-known best practice for Azure VM cost optimization, but it is hard to implement in practice.
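The core of a rightsizing decision can be sketched as picking the cheapest VM type whose vCPU and memory cover a workload's observed peak plus some headroom. This is a minimal sketch, not Sedai's algorithm: the catalog entries, their relative costs, and the 20% headroom are hypothetical placeholders rather than real Azure sizes or prices.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class VmType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_cost: float  # hypothetical relative cost units, not Azure prices


# Hypothetical catalog for illustration only.
CATALOG = [
    VmType("compute-2c-4g", 2, 4, 1.0),
    VmType("general-2c-8g", 2, 8, 1.2),
    VmType("memory-2c-16g", 2, 16, 1.6),
    VmType("general-4c-16g", 4, 16, 2.4),
    VmType("general-8c-32g", 8, 32, 4.8),
]


def pick_vm_type(
    catalog: Sequence[VmType],
    peak_vcpus: float,
    peak_memory_gib: float,
    headroom: float = 0.2,
) -> Optional[VmType]:
    """Return the cheapest type covering peak usage plus headroom, or None if nothing fits."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    fits = [t for t in catalog if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    if not fits:
        return None
    # Prefer the lowest cost; break ties with the smaller shape.
    return min(fits, key=lambda t: (t.hourly_cost, t.vcpus, t.memory_gib))


print(pick_vm_type(CATALOG, peak_vcpus=1.5, peak_memory_gib=5).name)
```

A workload peaking at 1.5 vCPU and 5 GiB lands on the 2 vCPU / 8 GiB type instead of the largest machine in the catalog, which is the kind of choice the "better safe than sorry" habit tends to skip.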

Analysis of the most recently released Azure VM usage dataset (available from Microsoft's GitHub account) shows that Azure VM users had an average utilization of just 8.2%, with 72% of users averaging below 20% utilization (see the distribution below). A common pattern uncovered in the dataset was the selection of a small number of powerful but oversized VMs.

Source: “Using Virtual Machine Size Recommendation Algorithms to Reduce Cloud Cost”, March 2023
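Headline figures like these are simple aggregates once utilization samples are grouped by user. A minimal sketch, assuming the overall figure is the mean of per-user averages (the cited paper may aggregate differently) and using made-up sample data rather than the Azure dataset:

```python
from statistics import mean
from typing import Dict, List, Tuple


def utilization_summary(
    samples_by_user: Dict[str, List[float]], threshold_pct: float = 20.0
) -> Tuple[float, float]:
    """Return (mean of per-user average utilization %, share of users averaging below threshold)."""
    per_user = [mean(samples) for samples in samples_by_user.values() if samples]
    overall = mean(per_user)
    share_below = sum(1 for avg in per_user if avg < threshold_pct) / len(per_user)
    return overall, share_below


# Hypothetical CPU utilization samples (%), not taken from the Azure dataset.
samples = {
    "user-a": [5, 10],
    "user-b": [30, 50],
    "user-c": [2, 4],
    "user-d": [15, 25],
}
overall, share = utilization_summary(samples)
print(f"average utilization {overall:.1f}%, {share:.0%} of users below 20%")
```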

Below is an example from a Sedai Azure customer environment of a heavily underutilized instance using just a few percent of CPU across a two-day period:

This is a customer-facing metric. Azure and other cloud providers achieve higher utilization rates internally because they operate shared environments (AWS reported 65% utilization a few years ago). The pricing of shared instances reflects this, with dedicated instances costing 250% or more.

Effective vertical scaling also matters because developers often default to overprovisioning Azure VM resources, opting for a simpler and quicker setup rather than testing extensively across multiple instance types. This approach, while expedient, typically results in VM configurations that exceed the application's actual requirements, increasing costs. The reluctance to test in detail stems from the time and complexity involved in evaluating each instance type's performance under different workloads. Consequently, developers lean towards a 'better safe than sorry' strategy. Although this reduces the risk of underperformance, it raises cloud costs inefficiently.

Many virtual machine workloads have bursty traffic patterns, especially small databases, development environments, and low-traffic websites. For example, in the dev/test workload below, CPU utilization stays around 2-4% but twice surges into the 13-15% range.

Because warmup periods may range from a few minutes to a few hours, horizontal scaling may not be viable, and a suitable burstable instance type may be the preferred approach. Without one, the VM has to be sized for the bursts and will run at low average utilization the rest of the time.
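Why a burstable type suits a profile like the dev/test workload above can be illustrated with a simplified credit model: the VM banks credits while it runs below a baseline and spends them to burst above it, falling back to the baseline once the bank is empty. This is a simplification loosely modeled on the credit mechanism of Azure's B-series, not its exact accounting; the 10% baseline, the credit cap, and the hourly profile are hypothetical.

```python
from typing import List, Tuple


def simulate_credits(
    demand_pct: List[float],
    baseline_pct: float,
    max_credits: float,
    start_credits: float = 0.0,
    interval_min: float = 60.0,
) -> Tuple[List[float], List[float]]:
    """Return (CPU % actually delivered, credit balance) for each interval.

    Credits are measured in percent-minutes above or below the baseline.
    """
    credits = start_credits
    delivered: List[float] = []
    balance: List[float] = []
    for demand in demand_pct:
        if demand <= baseline_pct:
            # Below baseline: serve the full demand and bank the unused share, up to the cap.
            credits = min(max_credits, credits + (baseline_pct - demand) * interval_min)
            served = demand
        else:
            # Above baseline: spend credits on the excess, throttling to baseline if they run out.
            spend = min((demand - baseline_pct) * interval_min, credits)
            credits -= spend
            served = baseline_pct + spend / interval_min
        delivered.append(served)
        balance.append(credits)
    return delivered, balance


# Hypothetical hourly profile: mostly 2-4% CPU with two bursts to 14-15%.
profile = [3, 3, 2, 4, 14, 3, 3, 15, 2, 3]
served, credits = simulate_credits(profile, baseline_pct=10.0, max_credits=5000.0)
print(served == profile, credits[-1])
```

In this run every hour's demand is served in full, because the quiet hours bank far more credit than the two bursts consume.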

A high proportion of applications on Azure run directly on virtual machines. These applications have not been replatformed onto microservices platforms such as Kubernetes (including Azure Kubernetes Service, AKS) or serverless frameworks such as Azure Functions. One key reason is that many of them do not benefit significantly from the horizontal scaling that microservices architectures offer. They may also have architectural or design constraints that make such a transition complex or suboptimal, limiting their ability to use newer computing paradigms efficiently.

Vertical scaling is also complicated by the sheer number of VM types available. There are currently over 400 Azure virtual machine types, offering varying compute, memory, and other characteristics. Below is a chart showing the density of options by vCPU count and memory size:

Asking engineers to make the optimal choice across potentially hundreds or thousands of services is challenging, especially when new code releases change a service's characteristics and the right instance type has to be determined again.

Vertical scaling is particularly advantageous for applications that need high performance from single instances or that have dependencies complicating distribution across multiple servers. By aligning Azure VM configurations closely with actual workload requirements, organizations can keep their applications performing well without paying for overprovisioned capacity. This gives more precise control over resource allocation, improving performance while reducing spend.

Sedai's Automated Optimization uses AI to understand Azure VM configurations and how they affect application cost and performance. The result is Azure VMs that are sized and configured to meet each application's specific needs without excess cost or underperformance. Key benefits include:

Our AI-driven platform continuously analyzes your Azure VMs to detect inefficiencies. It then autonomously implements optimizations, adjusting resources in real time without manual intervention.

The Sedai platform operates on a simple yet effective process: Discover, Recommend, Validate, Execute, and Track:

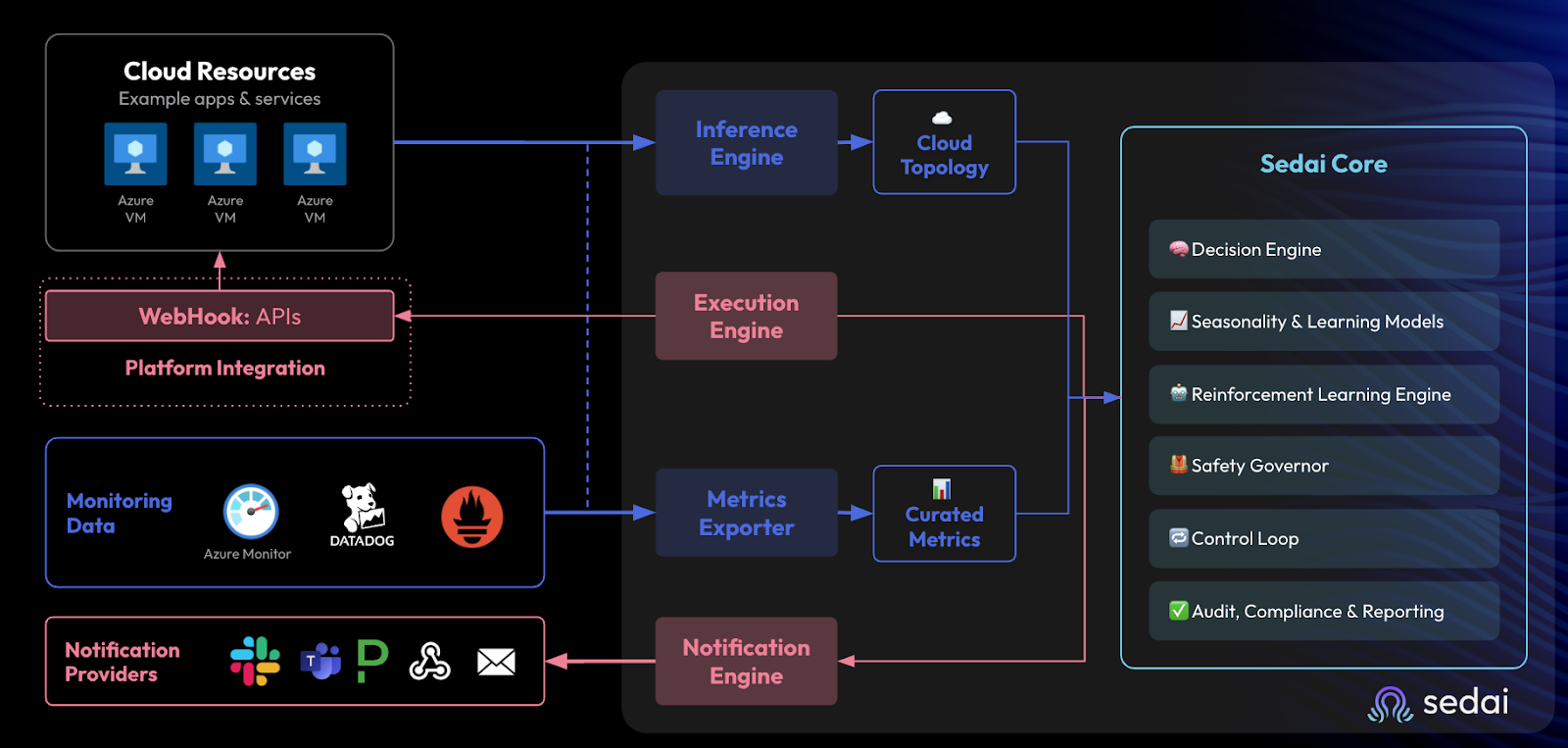

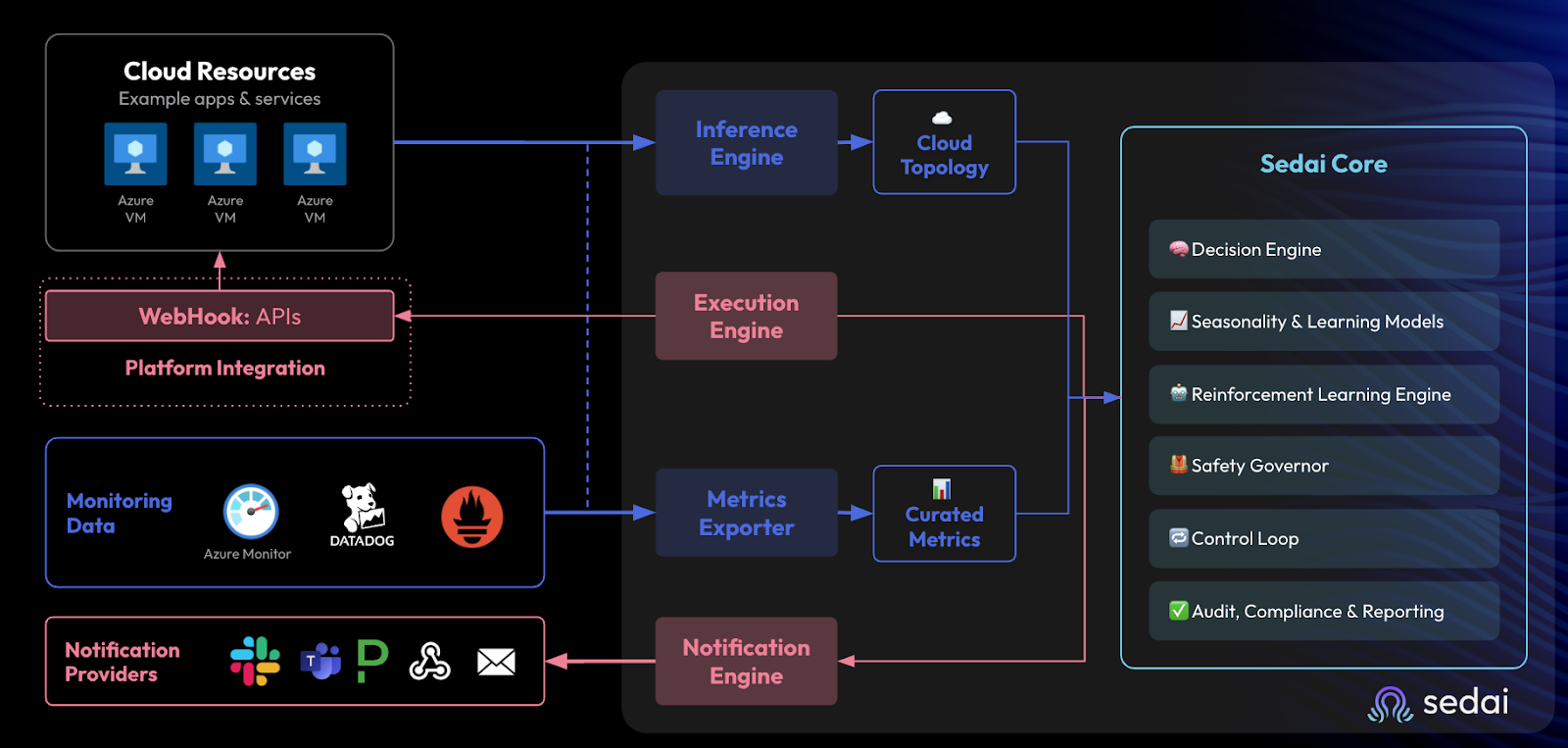
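The five stages (Discover, Recommend, Validate, Execute, and Track) can be pictured as a loop over discovered VMs. The sketch below is purely illustrative: the class, the callables, and the outcome labels are hypothetical and do not describe Sedai's internals or API. Each stage is injected as a function so the control flow stays visible: recommend, then validate, then execute, with every decision recorded for tracking.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Recommendation:
    vm: str
    current_type: str
    target_type: str


@dataclass
class OptimizationLoop:
    discover: Callable[[], List[str]]                     # Discover: list VM identifiers
    recommend: Callable[[str], Optional[Recommendation]]  # Recommend: propose a new type, or None
    validate: Callable[[Recommendation], bool]            # Validate: safety checks before acting
    execute: Callable[[Recommendation], None]             # Execute: apply the change
    history: List[Dict[str, str]] = field(default_factory=list)  # Track: record every outcome

    def run_once(self) -> List[Dict[str, str]]:
        results: List[Dict[str, str]] = []
        for vm in self.discover():
            rec = self.recommend(vm)
            if rec is None:
                outcome = "no_change"
            elif not self.validate(rec):
                outcome = "rejected"
            else:
                self.execute(rec)
                outcome = "applied"
            entry = {"vm": vm, "outcome": outcome}
            self.history.append(entry)
            results.append(entry)
        return results


# Usage with stub stages.
applied: List[str] = []
loop = OptimizationLoop(
    discover=lambda: ["vm-1", "vm-2", "vm-3"],
    recommend=lambda vm: None if vm == "vm-1" else Recommendation(vm, "large", "small"),
    validate=lambda rec: rec.vm != "vm-3",
    execute=lambda rec: applied.append(rec.vm),
)
print(loop.run_once())
```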

These capabilities form part of Sedai’s overall Azure VM optimization approach which can be seen below:

Some of the key elements above are:

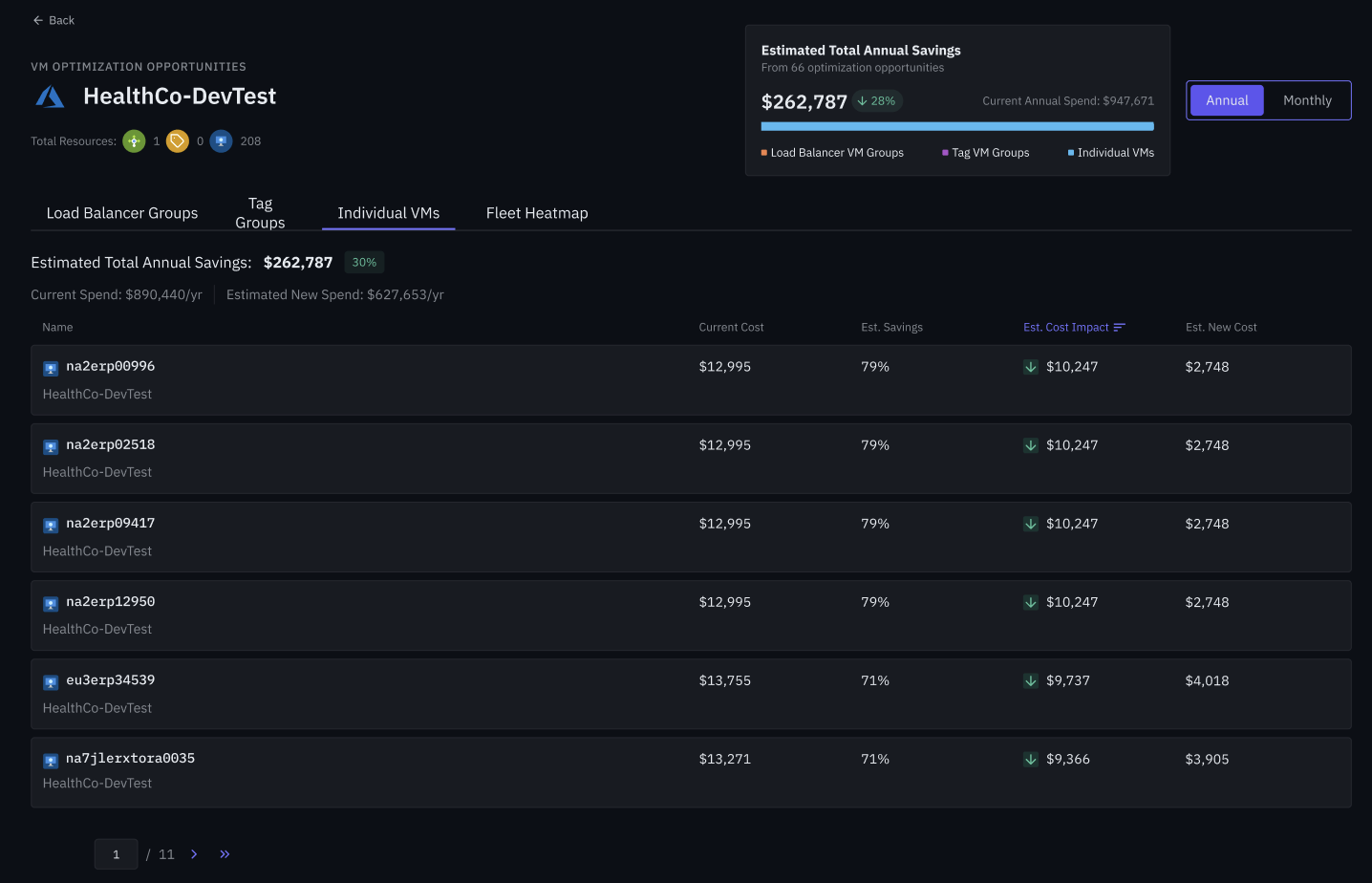

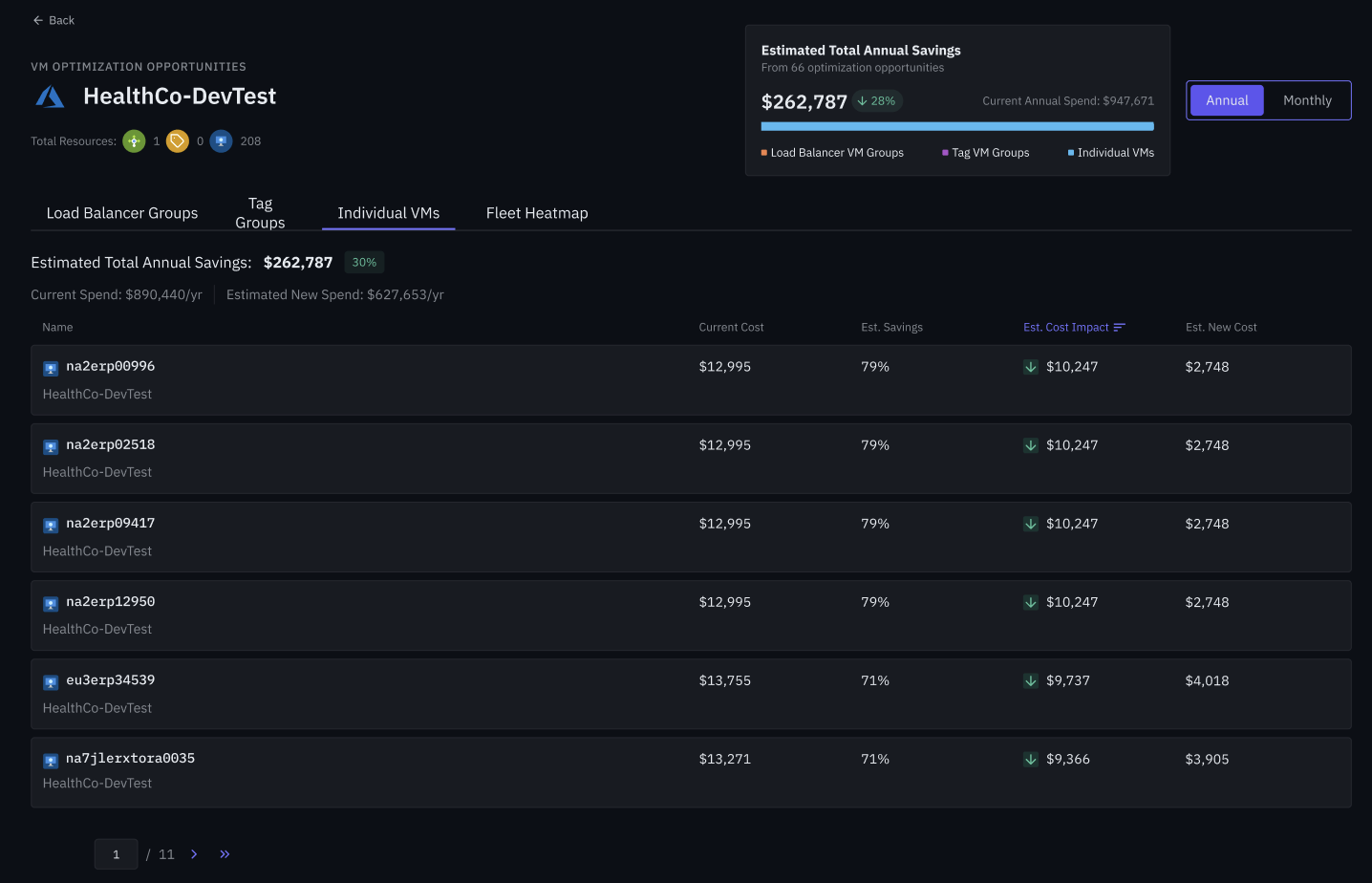

Early adopters have seen significant improvements in both performance and cost efficiency. For instance, a healthcare company has identified over $250k in annual savings (a 28% reduction) in its dev/test environments by rightsizing with Sedai.
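The two figures together indicate the scale of the environment. Assuming the 28% is measured against the same annual dev/test spend $B$ that the savings $S$ come from (the baseline is not stated), a quick check gives:

$$
B = \frac{S}{0.28} > \frac{250{,}000\ \text{USD}}{0.28} \approx 893{,}000\ \text{USD per year}
$$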

Below is an example of the safety check process performed during a VM resize. In this case it took 11 steps; most completed quickly, but stopping and restarting the VM took around 30 seconds each:
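The general shape of a safety-checked resize is an ordered list of steps that halts and rolls back on the first failure. A minimal sketch, using an in-memory dictionary as a stand-in for the VM: the five step names, `resize_plan`, and `run_steps` are hypothetical and are not the 11 steps Sedai runs; a real implementation would call the Azure API inside each step.

```python
import time
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_steps(steps: List[Step], rollback: Callable[[], None]) -> List[Tuple[str, bool, float]]:
    """Run steps in order, recording (name, ok, seconds); roll back and stop on the first failure."""
    log: List[Tuple[str, bool, float]] = []
    for name, action in steps:
        started = time.monotonic()
        ok = action()
        log.append((name, ok, time.monotonic() - started))
        if not ok:
            rollback()  # restore the original configuration before giving up
            break
    return log


def resize_plan(vm: Dict[str, object], target_type: str) -> Tuple[List[Step], Callable[[], None]]:
    """Build hypothetical resize steps against an in-memory stand-in for a VM."""
    original_type = vm["type"]

    def healthy() -> bool:
        return bool(vm["healthy"])

    def stop() -> bool:
        vm["running"] = False
        return True

    def resize() -> bool:
        vm["type"] = target_type
        return True

    def start() -> bool:
        vm["running"] = True
        return True

    def rollback() -> None:
        vm["type"] = original_type
        vm["running"] = True

    steps: List[Step] = [
        ("pre-check health", healthy),
        ("stop VM", stop),
        ("resize", resize),
        ("start VM", start),
        ("post-check health", healthy),
    ]
    return steps, rollback


vm = {"type": "type-large", "running": True, "healthy": True}
log = run_steps(*resize_plan(vm, "type-small"))
for name, ok, seconds in log:
    print(f"{name}: {'ok' if ok else 'failed'} ({seconds:.3f}s)")
```

Recording per-step durations is what makes slow steps, such as stopping and restarting a VM, stand out in a log like the one described above.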

To gain insight into the state of your VM fleet, you can see at a glance where applications are overprovisioned, underprovisioned, or optimized based on Sedai's findings. In the example below, 61% of the apps have been optimized (shown in green).
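A fleet view like this reduces to classifying each application and counting. A minimal sketch, assuming a simple rule on peak CPU utilization with hypothetical 40% and 85% thresholds; it illustrates the aggregation only and is not Sedai's classification logic.

```python
from collections import Counter
from typing import Dict


def classify(peak_util_pct: float, low: float = 40.0, high: float = 85.0) -> str:
    """Label an app by peak CPU utilization; the thresholds are hypothetical."""
    if peak_util_pct < low:
        return "over-provisioned"
    if peak_util_pct > high:
        return "under-provisioned"
    return "optimized"


def fleet_summary(peaks: Dict[str, float]) -> Dict[str, float]:
    """Return the percentage of apps in each status."""
    counts = Counter(classify(peak) for peak in peaks.values())
    return {status: 100.0 * n / len(peaks) for status, n in counts.items()}


# Hypothetical peak CPU utilization (%) per app.
peaks = {"billing": 20, "search": 60, "reports": 90, "auth": 70, "web": 50}
summary = fleet_summary(peaks)
print(summary)
```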

The service is available now, with flexible pricing based on the scale of your Azure VM deployment. Request a demo to see how Sedai can help you rightsize your Azure VMs.
