AI-driven optimization delivers significant capacity savings and boosts DevOps productivity in a VMware-based on-premises environment

Compute cost saving: 35%

Optimization time savings: more than 90%

Cloud Cost Optimization

Operations Productivity

App Modernization

Autonomous Optimization

Kubernetes

Rancher

VMware HyperV

Prometheus

ArgoCD

Logistics

North America

The company, one of the world’s ten largest logistics providers, is containerizing the majority of its applications and needed to manage a growing number of containerized logistics services as well as new greenfield services. One of the key services was the company’s warehouse management system (WMS), used to manage the receiving and storage of goods as well as picking, packing, shipping and inventory tracking. The technologies supporting the WMS, all running on Kubernetes, included Apache NiFi, Postgres, Superset and Apache APISIX.

The company started to face two significant challenges managing their growing Kubernetes environments running on VMware in their on-premises data centers.

The first challenge was ensuring that the Kubernetes services ran efficiently, consuming only the necessary compute resources to optimize existing datacenter capacity and avoid new capital investment. While doing so, the services also needed to meet the relevant SLAs for each service.

The second challenge was to minimize the demands on the team’s resources. The IT operations teams were stretched thin, managing both VMware infrastructure and Kubernetes clusters. Manually optimizing a large number of microservices across multiple Kubernetes clusters, each with its own resource requirements, was proving to be time-consuming and unsustainable. In the short term, the company was overprovisioning VMware resources to ensure Kubernetes workloads had sufficient headroom, which left expensive on-premises hardware underutilized.

In response to these challenges, the company decided to adopt Sedai’s autonomous optimization solution, part of Sedai’s autonomous cloud management platform. Sedai uses AI to optimize a range of services for cost, performance and availability goals.

To meet the company’s specific security requirements and on-premises infrastructure setup, Sedai’s technology was deployed within the company’s own data centers, integrating seamlessly with their VMware-based Kubernetes environments. This on-premises deployment ensured that the company maintained full control over their data and infrastructure.

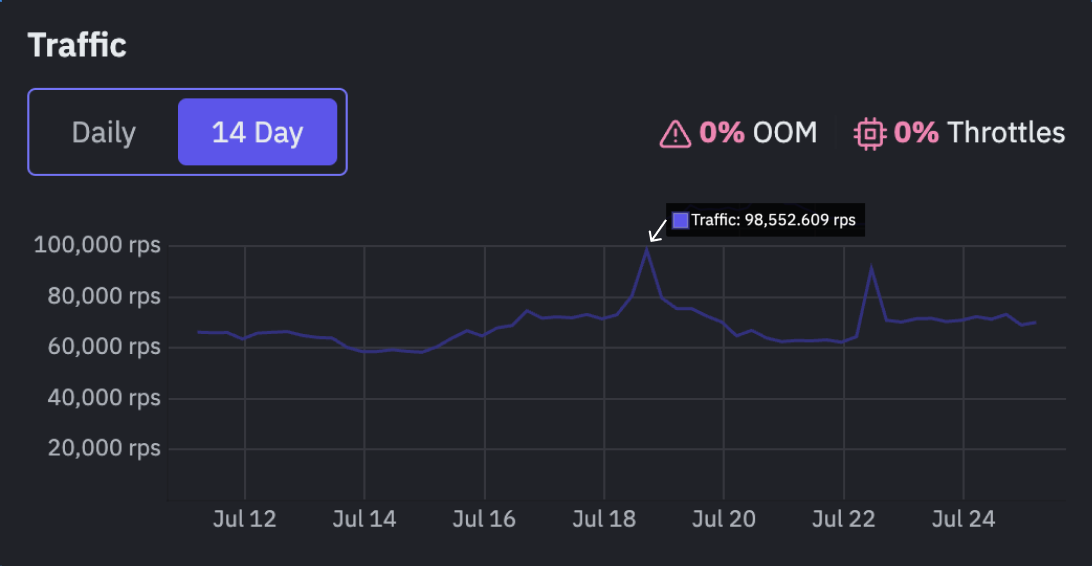

Ultimately, Sedai was deployed as a dedicated network on the company’s infrastructure. Sedai was granted permissions to map the Kubernetes cluster topology and analyze application and infrastructure behavior patterns, which was crucial for effective resource optimization. The DevOps team set the resource optimization goals for Sedai, aiming to find the most efficient use of Kubernetes resources while maintaining required performance levels.

Sedai applied AI techniques to determine the optimal workload and cluster configuration, taking into account the Kubernetes resource requirements.

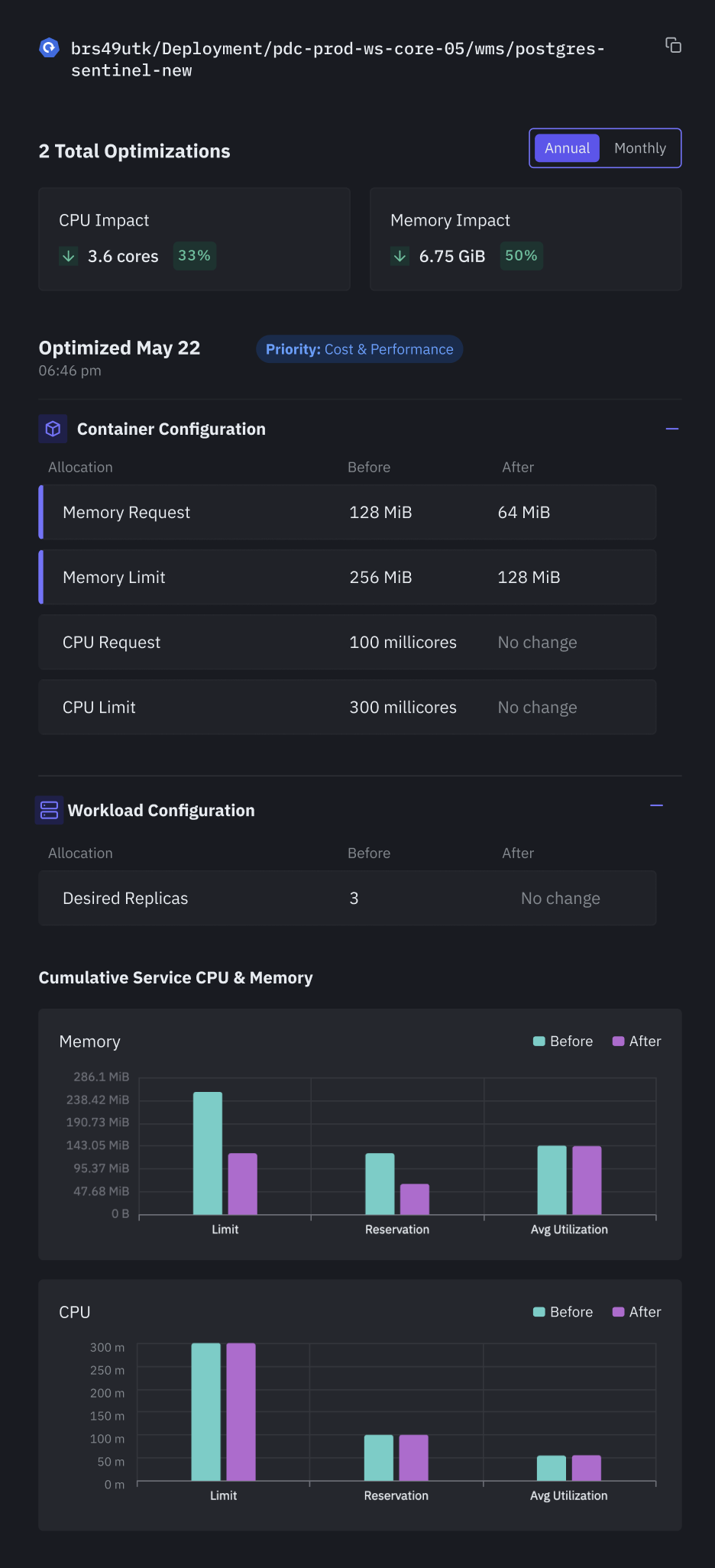

In the example shown above, Sedai analyzed one of the company’s Kubernetes deployments and found that it could operate with 33% less CPU and half the memory after two rounds of optimization. The second optimization, shown for May 22, reduced the memory request and limit while leaving CPU and replica count unchanged.
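To make the size of that change concrete, the following is a minimal sketch of how percentage reductions map onto Kubernetes-style resource quantities. The starting values (1500 millicores, 2048 MiB) and the names `Resources`, `rightsize` and `as_k8s_quantities` are hypothetical and chosen only for illustration; the case study does not publish the deployment's actual settings, and this is not Sedai's algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int  # CPU in millicores, e.g. 1500 -> "1500m"
    memory_mib: int      # memory in mebibytes, e.g. 2048 -> "2048Mi"


def rightsize(current: Resources, cpu_cut_pct: int, memory_cut_pct: int) -> Resources:
    """Return the resources left after cutting CPU and memory by whole percentages."""
    return Resources(
        cpu_millicores=current.cpu_millicores * (100 - cpu_cut_pct) // 100,
        memory_mib=current.memory_mib * (100 - memory_cut_pct) // 100,
    )


def as_k8s_quantities(r: Resources) -> dict:
    """Format the values the way they would appear in a pod spec."""
    return {"cpu": f"{r.cpu_millicores}m", "memory": f"{r.memory_mib}Mi"}


# Hypothetical starting requests (assumption, not from the case study).
before = Resources(cpu_millicores=1500, memory_mib=2048)

# The reductions reported for the example deployment: 33% less CPU, half the memory.
after = rightsize(before, cpu_cut_pct=33, memory_cut_pct=50)

print(as_k8s_quantities(before))  # {'cpu': '1500m', 'memory': '2048Mi'}
print(as_k8s_quantities(after))   # {'cpu': '1005m', 'memory': '1024Mi'}
```

Integer arithmetic is used so the results stay valid whole-number quantities; in practice the new values would also need to be validated against the service's SLAs before being applied.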

The implementation of Sedai’s technology allowed the team to make better use of its capacity and helped the team be more productive.

From a capacity perspective, Sedai identified a 29% CPU reduction and a 48% memory reduction, which translated to a 35% overall compute cost reduction. By rightsizing Kubernetes resources, primarily by adjusting configurations at both the workload and cluster levels, the company was able to reduce the cluster size, freeing capacity for other workloads and deferring datacenter investment.
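The case study does not say how the CPU and memory figures were combined into the overall number. One simple way to reconcile them, assuming compute cost is a linear blend of CPU cost and memory cost, is sketched below. The linear cost model and the function names are assumptions made for illustration, not Sedai's published method.

```python
def blended_saving(cpu_saving: float, mem_saving: float, cpu_cost_share: float) -> float:
    """Overall saving when CPU accounts for cpu_cost_share of compute cost."""
    return cpu_cost_share * cpu_saving + (1 - cpu_cost_share) * mem_saving


def implied_cpu_share(cpu_saving: float, mem_saving: float, overall: float) -> float:
    """Solve the blend equation for the CPU cost share that yields `overall`."""
    return (mem_saving - overall) / (mem_saving - cpu_saving)


# Figures reported in the case study.
cpu_saving, mem_saving, overall = 0.29, 0.48, 0.35

share = implied_cpu_share(cpu_saving, mem_saving, overall)
print(f"Implied CPU share of compute cost: {share:.0%}")
print(f"Blended saving at that share: {blended_saving(cpu_saving, mem_saving, share):.0%}")
```

Under this assumed model, the reported figures are consistent with CPU accounting for roughly two thirds of compute cost and memory for the remainder.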

At the same time, the company was also able to reduce the time spent on Kubernetes optimization by more than 90%, enabling it to operate with a leaner DevOps team.

Together, the improvements in capacity efficiency and team productivity put the company in a strong position to expand its Kubernetes footprint efficiently as it continues its application modernization journey. The company is also now exploring additional Sedai use cases, including autonomous remediation and optimization for virtual machines.