
August 2, 2023

October 26, 2022



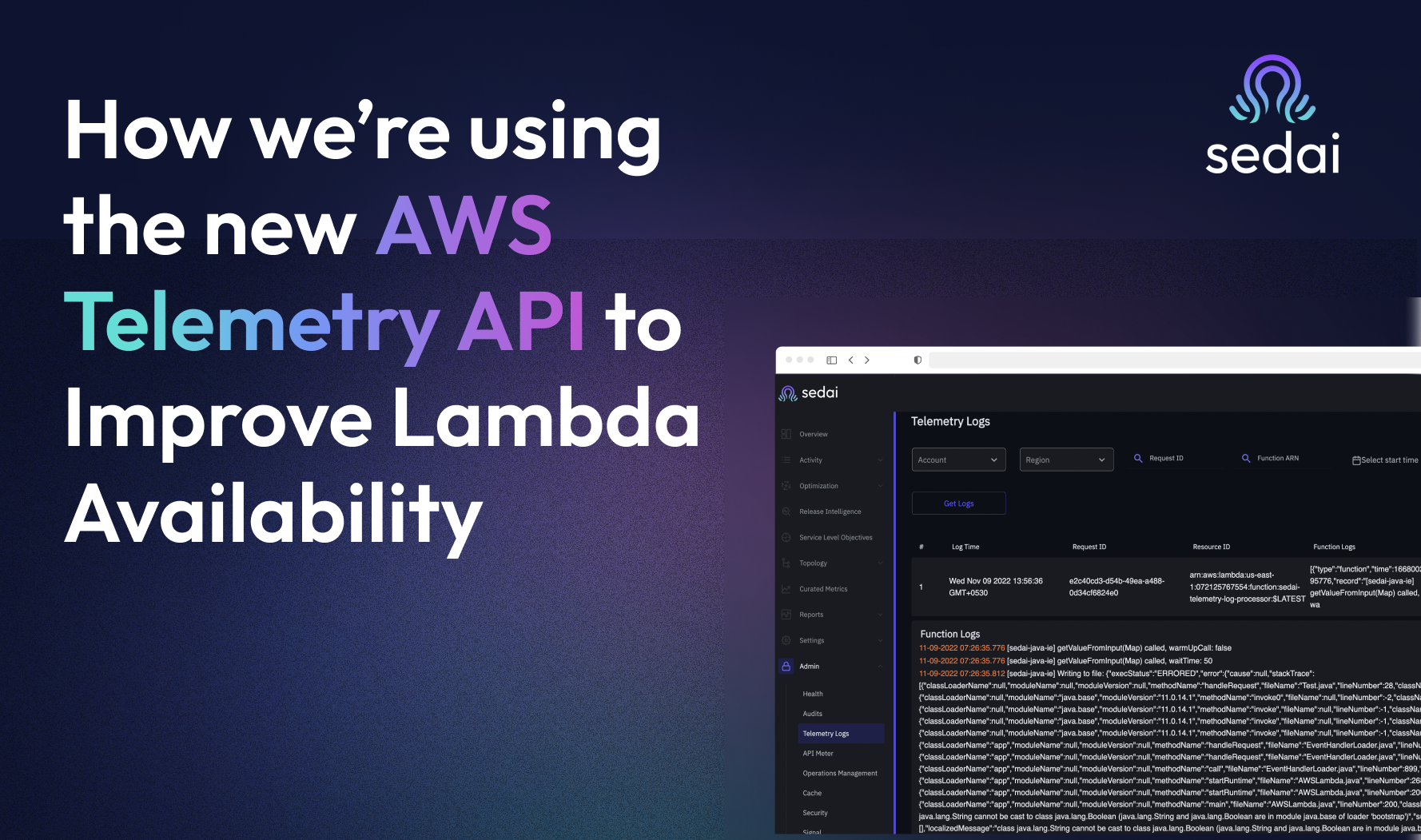

One of the key goals of Sedai’s autonomous cloud management platform is to maximize application availability. AWS Lambda is launching the new Telemetry API, and as a launch partner, Sedai uses its capabilities to surface additional insights and signals that help improve the availability of customers’ Lambda functions.

The new Lambda Telemetry API enables AWS users to integrate monitoring and observability tools with their Lambda functions. AWS customers and the serverless community can use this API to receive telemetry streams from the Lambda service, including function and extension logs, as well as events, traces, and metrics coming from the Lambda platform itself.
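To make the integration point more concrete, here is a minimal sketch of the subscription request an extension could send to start receiving these streams. It is an illustration under assumptions, not Sedai's implementation: the function name `build_subscription_request`, the listener port, and the buffering values are hypothetical choices, and the schema version shown is the launch-era one, so check the AWS documentation for the current value. An extension would typically send this body with an HTTP PUT to the telemetry endpoint exposed under the `AWS_LAMBDA_RUNTIME_API` address, identifying itself with the extension identifier it received at registration.

```python
import json

# Launch-era schema version string; newer versions may exist (assumption).
SCHEMA_VERSION = "2022-07-01"


def build_subscription_request(listener_port: int = 8080) -> dict:
    """Build the JSON body for a Telemetry API subscription (hypothetical helper)."""
    return {
        "schemaVersion": SCHEMA_VERSION,
        # The three stream categories: platform events, function logs, extension logs.
        "types": ["platform", "function", "extension"],
        # Illustrative buffering settings; tune them for your own workload.
        "buffering": {"maxItems": 1000, "maxBytes": 262144, "timeoutMs": 1000},
        # The extension's own local HTTP listener that Lambda will post batches to.
        "destination": {
            "protocol": "HTTP",
            "URI": f"http://sandbox.localdomain:{listener_port}",
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_subscription_request(), indent=2))
```

Keeping the request in a plain dictionary makes it easy to narrow the `types` list when only function logs are of interest.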

Sedai uses the Telemetry API to help improve Lambda performance and availability for our customers. We see two related use cases for the new capability:

While a Lambda function is running, it generates logs. Those logs are available to a custom extension that we wrote, which runs alongside the function. The logs give us a lot of useful information about the runtime, such as:

Below is an example inside the Sedai platform of logs captured and an example of the detailed error information that can be accessed:

[Screenshot: logs captured in the Sedai platform]

[Screenshot: detailed error information]
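As a rough sketch of how an extension might turn a batch of telemetry events into error details like these, the snippet below splits a batch into error-looking function log lines and per-invocation metrics. Everything here is hypothetical: `summarize_batch`, the keyword heuristic for spotting errors, and the sample events are illustrations only, not how Sedai's extension works. The event shape (`time`, `type`, `record`) and the `platform.report` event type follow the Telemetry API's published schema.

```python
from collections import defaultdict

# Hypothetical heuristic: substrings that suggest a log line describes an error.
ERROR_MARKERS = ("ERROR", "Traceback", "Exception")


def summarize_batch(events):
    """Split one batch of telemetry events into error lines and report metrics."""
    counts = defaultdict(int)
    error_lines = []
    reports = {}
    for event in events:
        event_type = event.get("type", "unknown")
        record = event.get("record")
        counts[event_type] += 1
        if event_type == "function" and isinstance(record, str):
            # Function log records arrive as plain text lines.
            if any(marker in record for marker in ERROR_MARKERS):
                error_lines.append((event.get("time"), record.rstrip()))
        elif event_type == "platform.report" and isinstance(record, dict):
            # Report events carry per-invocation metrics keyed by request ID.
            reports[record.get("requestId")] = record.get("metrics", {})
    return {"counts": dict(counts), "error_lines": error_lines, "reports": reports}


if __name__ == "__main__":
    # Made-up sample events for illustration only.
    sample = [
        {"time": "2022-10-26T00:00:01Z", "type": "function",
         "record": "[ERROR] KeyError: 'user_id'\n"},
        {"time": "2022-10-26T00:00:02Z", "type": "platform.report",
         "record": {"requestId": "req-1", "metrics": {"durationMs": 12.5}}},
    ]
    print(summarize_batch(sample))
```

A real extension would run this kind of logic inside its HTTP listener and forward the summary to a backend rather than printing it.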

We also expect additional use cases to emerge over time for the Telemetry API as a flexible source of monitoring data.

Top benefits for Sedai customers:

Our internal engineering team, which uses the Telemetry API directly, has also seen three major benefits:

Sedai’s serverless platform using the Telemetry API is now available globally for x86-compatible serverless functions. Sign up at app.sedai.io/signup or request a demo at sedai.io. To learn more about the feature, read AWS's launch blog here.
